PyCaret is an open-source, low-code machine learning library that simplifies and standardizes the end-to-end machine learning workflow. Instead of acting as a single AutoML algorithm, PyCaret functions as an experiment framework that wraps many popular machine learning libraries under a consistent and highly productive API

This design choice matters. PyCaret does not fully automate decision-making behind the scenes. It accelerates repetitive work such as preprocessing, model comparison, tuning, and deployment, while keeping the workflow transparent and controllable.

Positioning PyCaret in the ML Ecosystem

PyCaret is best described as an experiment orchestration layer rather than a strict AutoML engine. While many AutoML tools focus on exhaustive model and hyperparameter search, PyCaret focuses on reducing human effort and boilerplate code.

This philosophy aligns with the “citizen data scientist” concept popularized by Gartner, where productivity and standardization are prioritized. PyCaret also draws inspiration from the caret library in R, emphasizing consistency across model families.

Core Experiment Lifecycle

Across classification, regression, time series, clustering, and anomaly detection, PyCaret enforces the same lifecycle:

setup()initializes the experiment and builds the preprocessing pipelinecompare_models()benchmarks candidate models using cross-validationcreate_model()trains a selected estimator- Optional tuning or ensembling steps

finalize_model()retrains the model on the full datasetpredict_model(),save_model(), ordeploy_model()for inference and deployment

The separation between evaluation and finalization is critical. Once a model is finalized, the original holdout data becomes part of training, so proper evaluation must occur beforehand

Preprocessing as a First-Class Feature

PyCaret treats preprocessing as part of the model, not a sidestep. All transformations such as imputation, encoding, scaling, and normalization are captured in a single pipeline object. This pipeline is reused during inference and deployment, reducing the risk of training-serving mismatch.

Advanced options include rare-category grouping, iterative imputation, text vectorization, pipeline caching, and parallel-safe data loading. These features make PyCaret suitable not only for beginners, but also for serious applied workflows

Building and Comparing Models with PyCaret

Here is the full Colab link for the project: Colab

Binary Classification Workflow

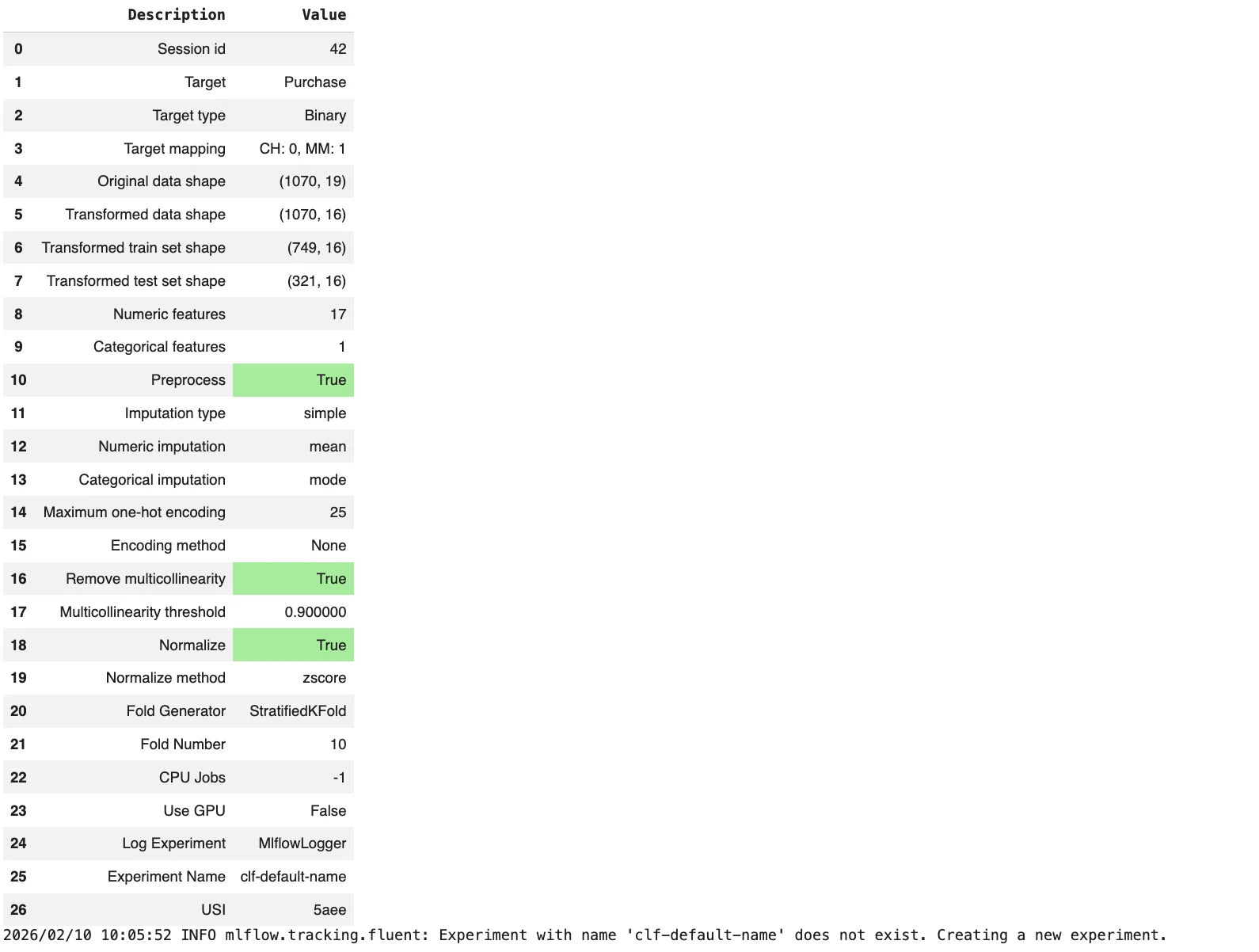

This example shows a complete classification experiment using PyCaret.

from pycaret.datasets import get_data

from pycaret.classification import *

# Load example dataset

data = get_data("juice")

# Initialize experiment

exp = setup(

data=data,

target="Purchase",

session_id=42,

normalize=True,

remove_multicollinearity=True,

log_experiment=True

)

# Compare all available models

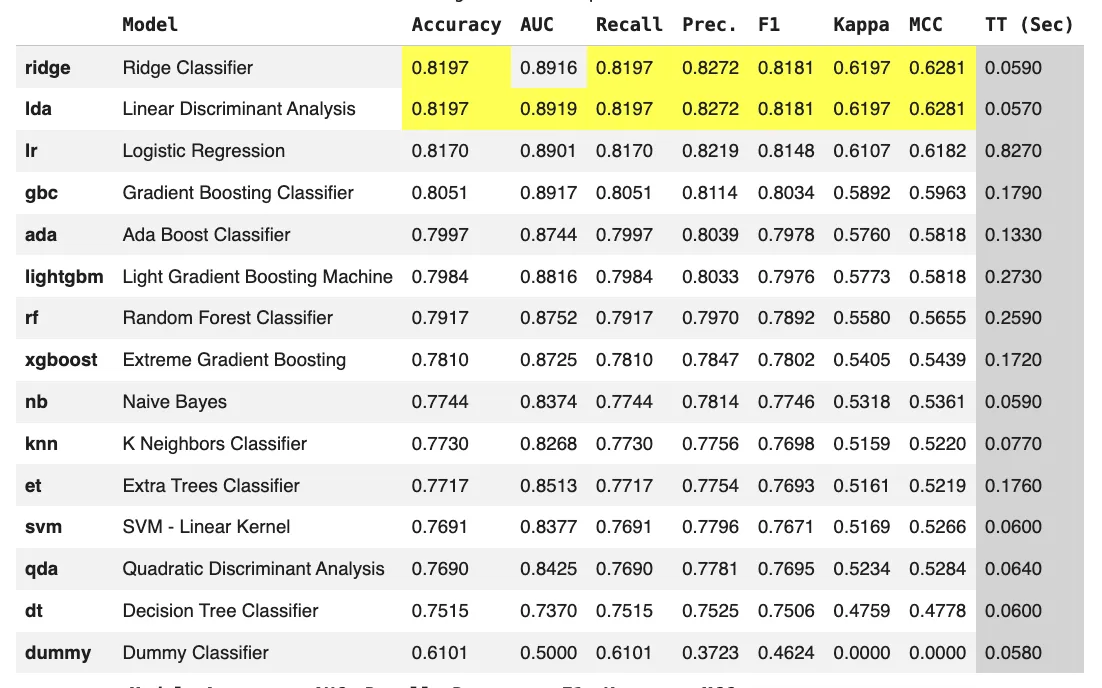

best_model = compare_models()

# Inspect performance on holdout data

holdout_preds = predict_model(best_model)

# Train final model on full dataset

final_model = finalize_model(best_model)

# Save pipeline + model

save_model(final_model, "juice_purchase_model")What this demonstrates:

setup()builds a full preprocessing pipelinecompare_models()benchmarks many algorithms with one callfinalize_model()retrains using all available data- The saved artifact includes preprocessing and model together

From the output, we can see that the dataset is dominated by numeric features and benefits from normalization and multicollinearity removal. Linear models such as Ridge Classifier and LDA achieve the best performance, indicating a largely linear relationship between pricing, promotions, and purchase behavior. The finalized Ridge model shows improved accuracy when trained on the full dataset, and the saved pipeline ensures consistent preprocessing and inference.

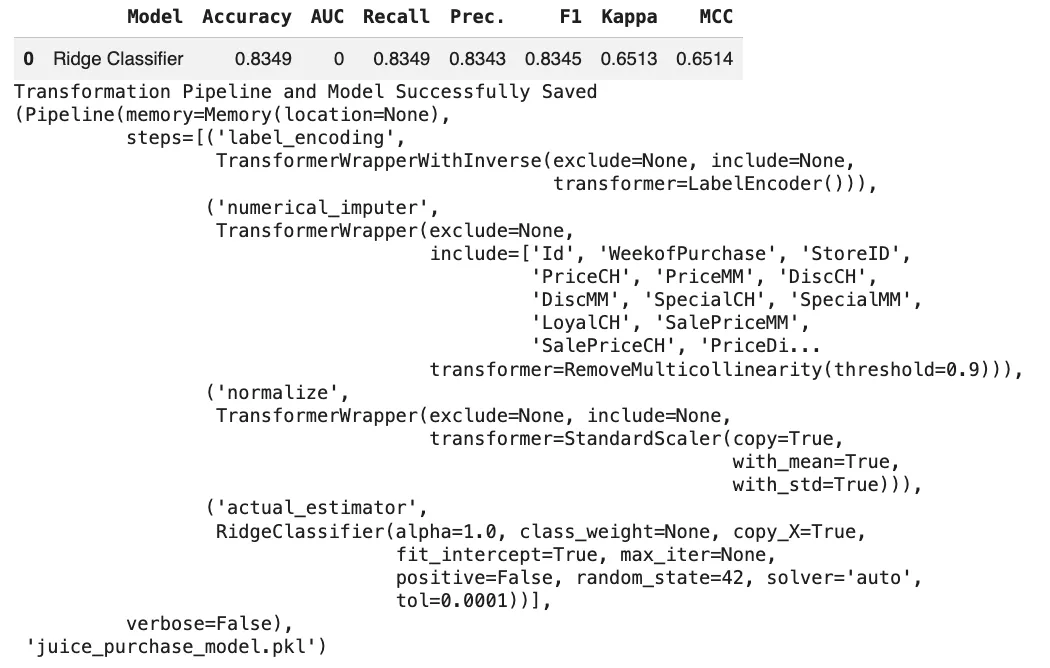

Regression with Custom Metrics

from pycaret.datasets import get_data

from pycaret.regression import *

data = get_data("boston")

exp = setup(

data=data,

target="medv",

session_id=123,

fold=5

)

top_models = compare_models(sort="RMSE", n_select=3)

tuned = tune_model(top_models[0])

final = finalize_model(tuned)Here, PyCaret allows fast comparison while still enabling tuning and metric-driven selection.

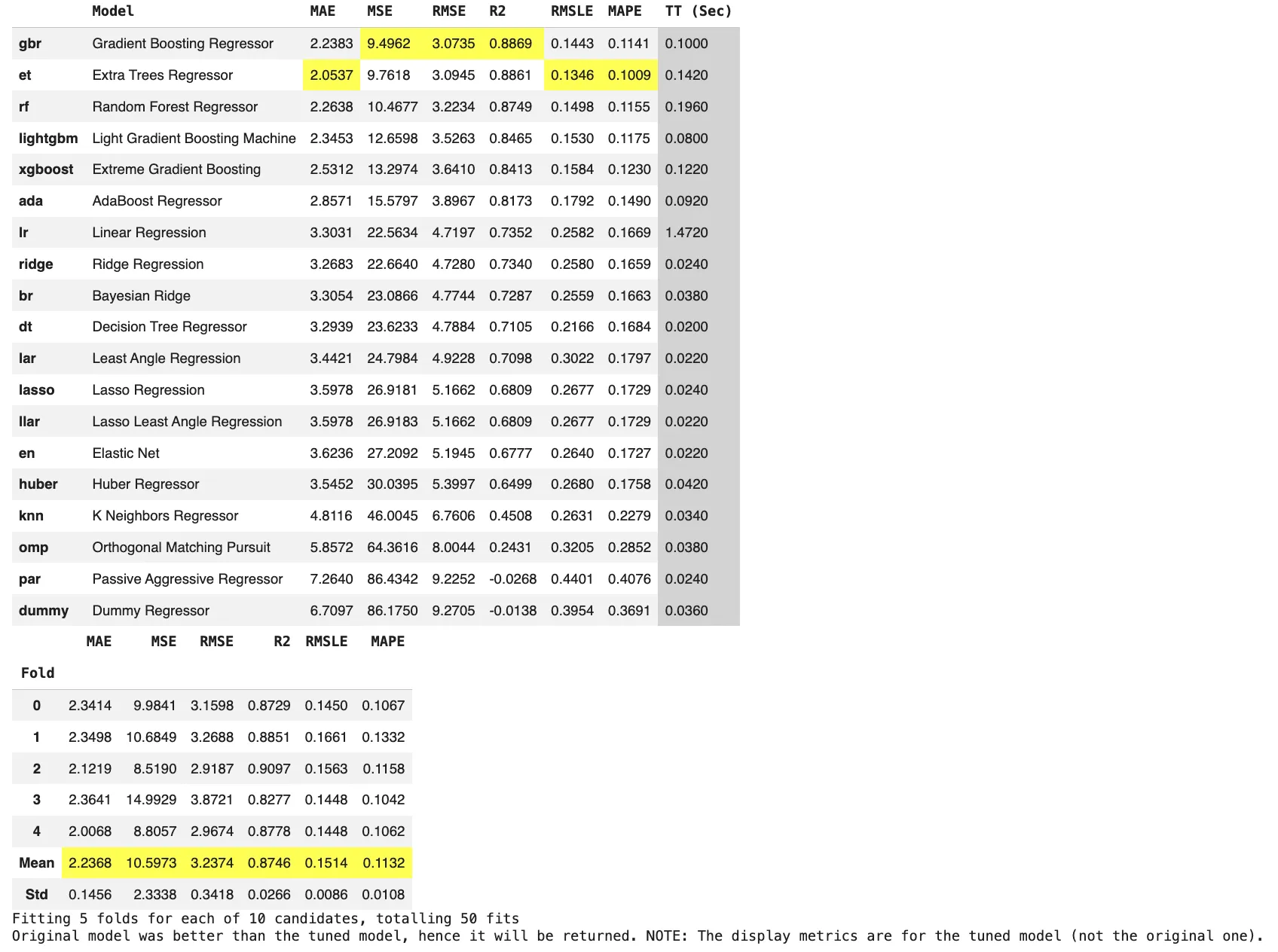

From the output, we can see that the dataset is fully numeric and well suited for tree-based models. Ensemble methods such as Gradient Boosting, Extra Trees, and Random Forest clearly outperform linear models, achieving higher R2 scores, and lower error metrics. This indicates strong nonlinear relationships between features like crime rates, rooms, location factors, and house prices. Linear and sparse models perform significantly worse, confirming that simple linear assumptions are insufficient for this problem.

Time Series Forecasting

from pycaret.datasets import get_data

from pycaret.time_series import *

y = get_data("airline")

exp = setup(

data=y,

fh=12,

session_id=7

)

best = compare_models()

forecast = predict_model(best)

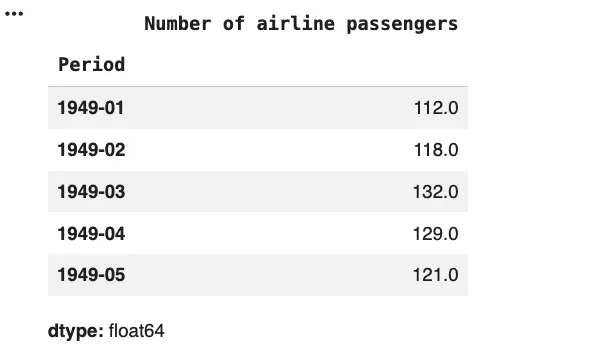

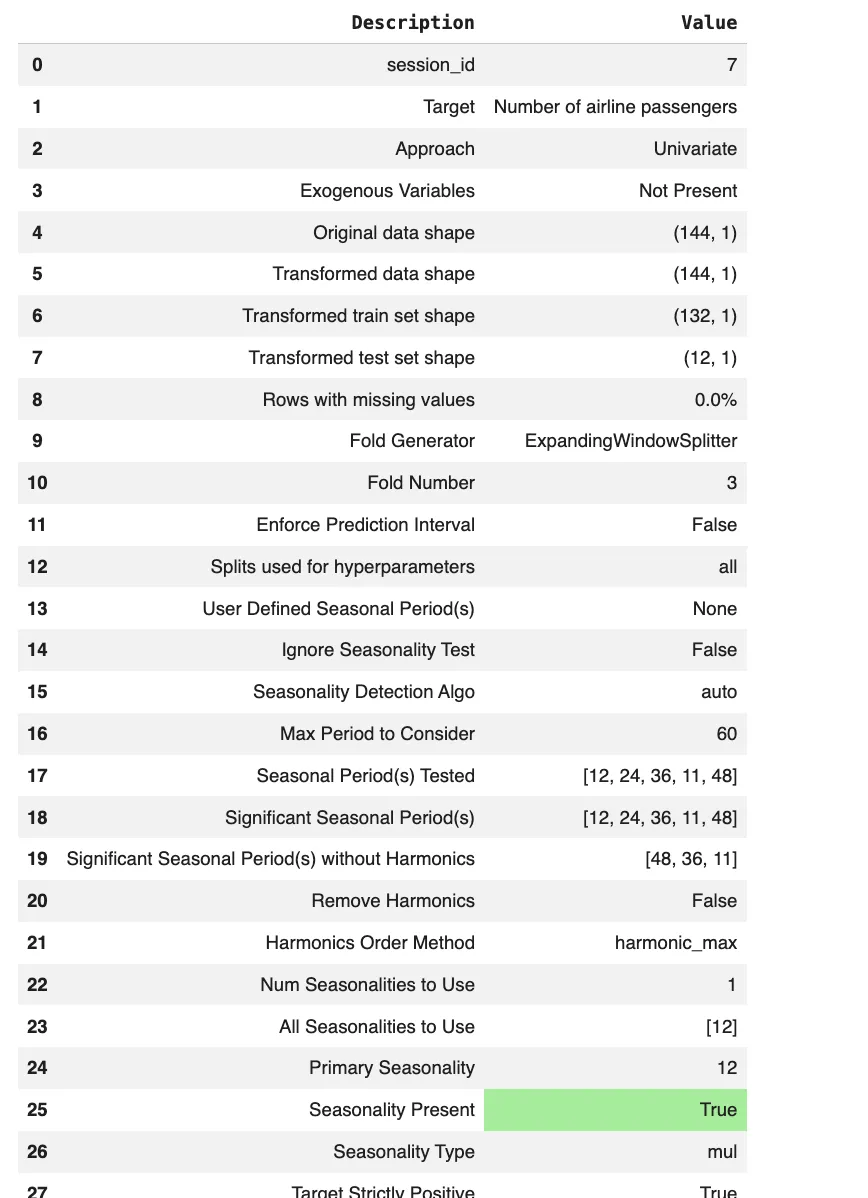

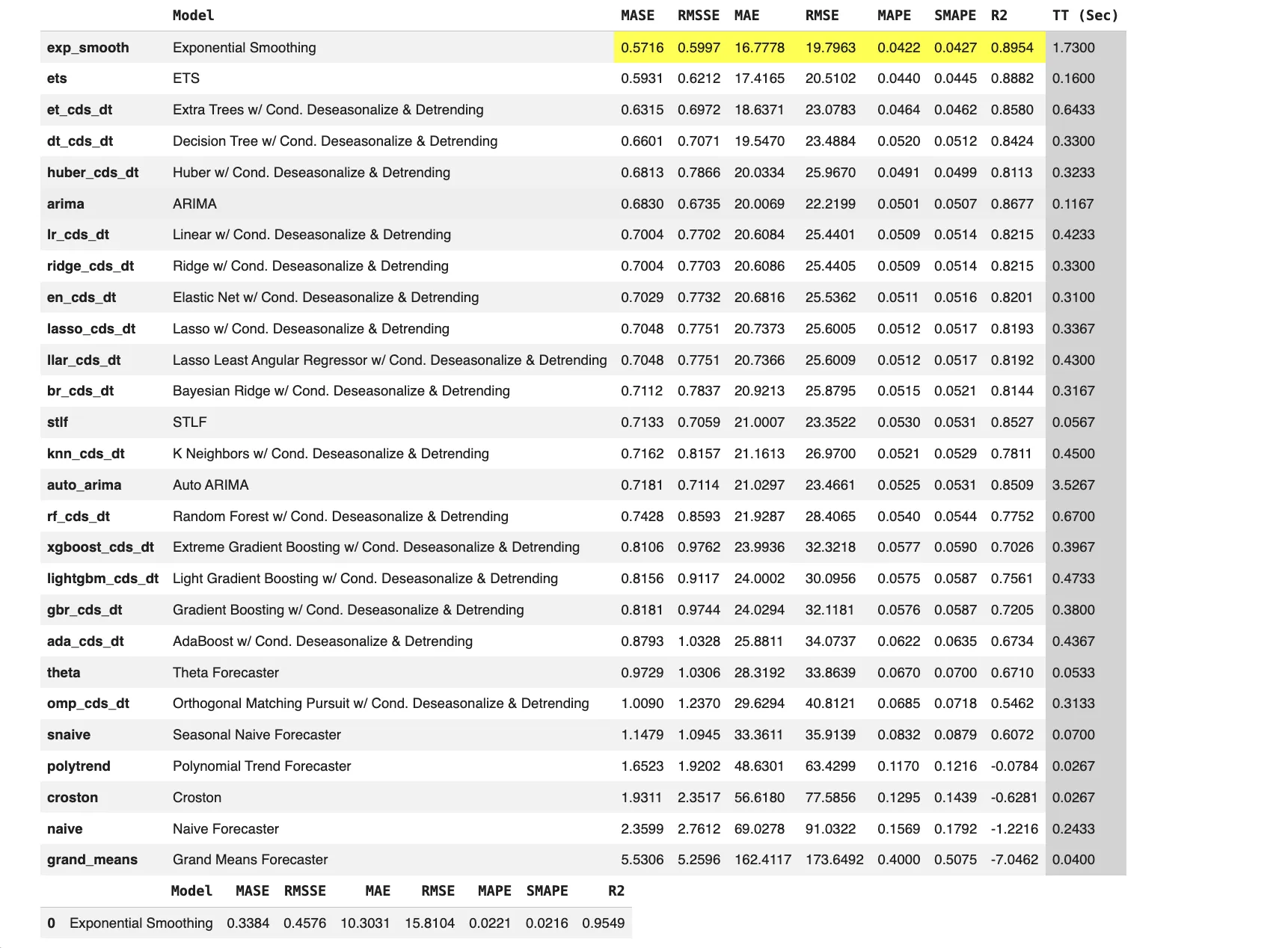

From the output, we can see that the series is strictly positive and exhibits strong multiplicative seasonality with a primary seasonal period of 12, confirming a clear yearly pattern. The recommended differencing values also indicate both trend and seasonal components are present.

Exponential Smoothing performs best, achieving the lowest error metrics and highest R2, showing that classical statistical models handle this seasonal structure very well. Machine learning based models with deseasonalization perform reasonably but do not outperform the top statistical methods for this univariate seasonal dataset.

This example highlights how PyCaret adapts the same workflow to forecasting by introducing time series concepts like forecast horizons, while keeping the API familiar.

Clustering

from pycaret.clustering import *

from pycaret.anomaly import *

# Clustering

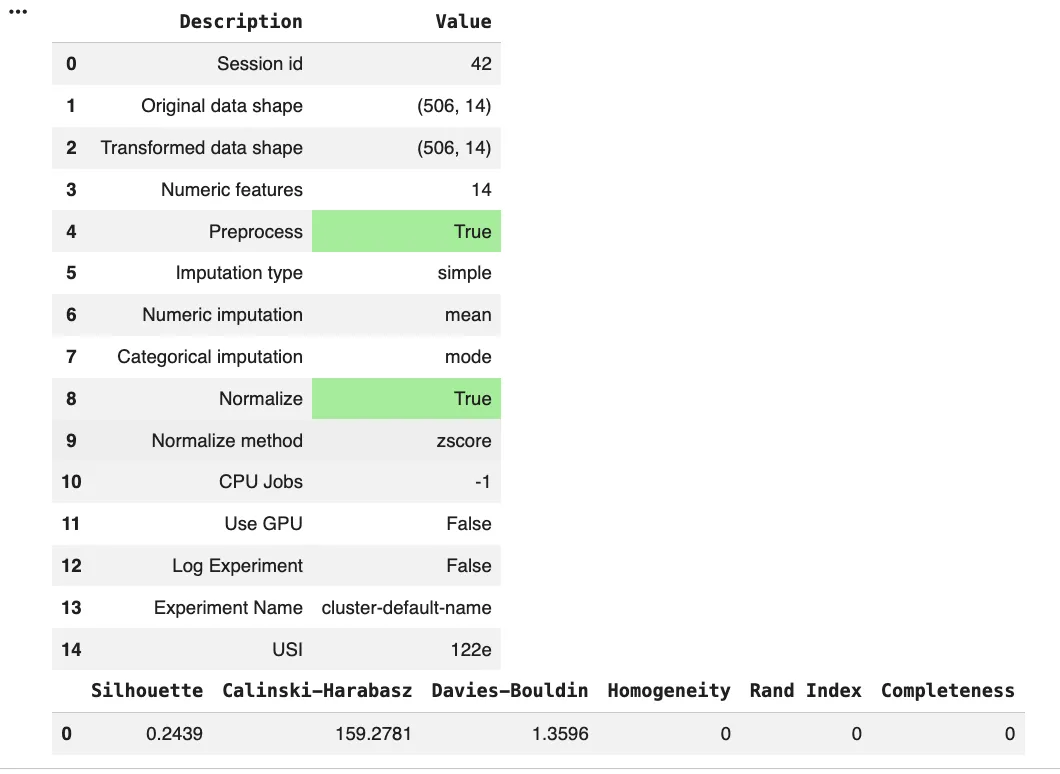

exp_clust = setup(data, normalize=True)

kmeans = create_model("kmeans")

clusters = assign_model(kmeans)

From the output we can see that the clustering experiment was run on fully numeric data with preprocessing enabled, including mean imputation and z-score normalization. The silhouette score is relatively low, indicating weak cluster separation. Calinski–Harabasz and Davies–Bouldin scores suggest overlapping clusters rather than clearly distinct groups. Homogeneity, Rand Index, and Completeness are zero, which is expected in an unsupervised setting without ground truth labels.

Classification models supported in the built-in model library

PyCaret’s classification module supports supervised learning with categorical target variables. The create_model() function accepts an estimator ID from the built-in model library or a scikit-learn compatible estimator object.

The table below lists the classification estimator IDs and their corresponding model names.

| Estimator ID | Model name in PyCaret |

| lr | Logistic Regression |

| knn | K Neighbors Classifier |

| nb | Naive Bayes |

| dt | Decision Tree Classifier |

| svm | SVM Linear Kernel |

| rbfsvm | SVM Radial Kernel |

| gpc | Gaussian Process Classifier |

| mlp | MLP Classifier |

| ridge | Ridge Classifier |

| rf | Random Forest Classifier |

| qda | Quadratic Discriminant Analysis |

| ada | Ada Boost Classifier |

| gbc | Gradient Boosting Classifier |

| lda | Linear Discriminant Analysis |

| et | Extra Trees Classifier |

| xgboost | Extreme Gradient Boosting |

| lightgbm | Light Gradient Boosting Machine |

| catboost | CatBoost Classifier |

When comparing many models, several classification specific details matter. The compare_models() function trains and evaluates all available estimators using cross-validation. It then sorts the results by a selected metric, with accuracy used by default. For binary classification, the probability_threshold parameter controls how predicted probabilities are converted into class labels. The default value is 0.5 unless it is changed. For larger or scaled runs, a use_gpu flag can be enabled for supported algorithms, with additional requirements depending on the model.

Regression models supported in the built-in model library

PyCaret’s regression module uses the same model library by ID pattern as classification. The create_model() function accepts an estimator ID from the built-in library or any scikit-learn compatible estimator object.

The table below lists the regression estimator IDs and their corresponding model names.

| Estimator ID | Model name in PyCaret |

| lr | Linear Regression |

| lasso | Lasso Regression |

| ridge | Ridge Regression |

| en | Elastic Net |

| lar | Least Angle Regression |

| llar | Lasso Least Angle Regression |

| omp | Orthogonal Matching Pursuit |

| br | Bayesian Ridge |

| ard | Automatic Relevance Determination |

| par | Passive Aggressive Regressor |

| ransac | Random Sample Consensus |

| tr | TheilSen Regressor |

| huber | Huber Regressor |

| kr | Kernel Ridge |

| svm | Support Vector Regression |

| knn | K Neighbors Regressor |

| dt | Decision Tree Regressor |

| rf | Random Forest Regressor |

| et | Extra Trees Regressor |

| ada | AdaBoost Regressor |

| gbr | Gradient Boosting Regressor |

| mlp | MLP Regressor |

| xgboost | Extreme Gradient Boosting |

| lightgbm | Light Gradient Boosting Machine |

| catboost | CatBoost Regressor |

These regression models can be grouped by how they typically behave in practice. Linear and sparse linear families such as lr, lasso, ridge, en, lar, and llar are often used as fast baselines. They train quickly and are easier to interpret. Tree based ensembles and boosting families such as rf, et, ada, gbr, and the gradient boosting libraries xgboost, lightgbm, and catboost often perform very well on structured tabular data. They are more complex and more sensitive to tuning and data leakage if preprocessing is not handled carefully. Kernel and neighborhood methods such as svm, kr, and knn can model non linear relationships. They can become computationally expensive on large datasets and usually require proper feature scaling.

Time series forecasting models supported in the built-in model library

PyCaret provides a dedicated time series module built around forecasting concepts such as the forecast horizon (fh). It supports sktime compatible estimators. The set of available models depends on the installed libraries and the experiment configuration, so availability can vary across environments.

The table below lists the estimator IDs and model names supported in the built-in time series model library.

| Estimator ID | Model name in PyCaret |

| naive | Naive Forecaster |

| grand_means | Grand Means Forecaster |

| snaive | Seasonal Naive Forecaster |

| polytrend | Polynomial Trend Forecaster |

| arima | ARIMA family of models |

| auto_arima | Auto ARIMA |

| exp_smooth | Exponential Smoothing |

| stlf | STL Forecaster |

| croston | Croston Forecaster |

| ets | ETS |

| theta | Theta Forecaster |

| tbats | TBATS |

| bats | BATS |

| prophet | Prophet Forecaster |

| lr_cds_dt | Linear with Conditional Deseasonalize and Detrending |

| en_cds_dt | Elastic Net with Conditional Deseasonalize and Detrending |

| ridge_cds_dt | Ridge with Conditional Deseasonalize and Detrending |

| lasso_cds_dt | Lasso with Conditional Deseasonalize and Detrending |

| llar_cds_dt | Lasso Least Angle with Conditional Deseasonalize and Detrending |

| br_cds_dt | Bayesian Ridge with Conditional Deseasonalize and Detrending |

| huber_cds_dt | Huber with Conditional Deseasonalize and Detrending |

| omp_cds_dt | Orthogonal Matching Pursuit with Conditional Deseasonalize and Detrending |

| knn_cds_dt | K Neighbors with Conditional Deseasonalize and Detrending |

| dt_cds_dt | Decision Tree with Conditional Deseasonalize and Detrending |

| rf_cds_dt | Random Forest with Conditional Deseasonalize and Detrending |

| et_cds_dt | Extra Trees with Conditional Deseasonalize and Detrending |

| gbr_cds_dt | Gradient Boosting with Conditional Deseasonalize and Detrending |

| ada_cds_dt | AdaBoost with Conditional Deseasonalize and Detrending |

| lightgbm_cds_dt | Light Gradient Boosting with Conditional Deseasonalize and Detrending |

| catboost_cds_dt | CatBoost with Conditional Deseasonalize and Detrending |

Some models support multiple execution backends. An engine parameter can be used to switch between available backends for supported estimators, such as choosing different implementations for auto_arima.

Beyond the built-in library: custom estimators, MLOps hooks, and removed modules

PyCaret is not limited to its built in estimator IDs. You can pass an untrained estimator object as long as it follows the scikit learn style API. The models() function shows what is available in the current environment. The create_model() function returns a trained estimator object. In practice, this means that any scikit learn compatible model can often be managed inside the same training, evaluation, and prediction workflow.

PyCaret also includes experiment tracking hooks. The log_experiment parameter in setup() enables integration with tools such as MLflow, Weights and Biases, and Comet. Setting it to True uses MLflow by default. For deployment workflows, deploy_model() and load_model() are available across modules. These support cloud platforms such as Amazon Web Services, Google Cloud Platform, and Microsoft Azure through platform specific authentication settings.

Earlier versions of PyCaret included modules for NLP and association rule mining. These modules were removed in PyCaret 3. Importing pycaret.nlp or pycaret.arules in current versions results in missing module errors. Access to those features requires PyCaret 2.x. In current versions, the supported surface area is limited to the active modules in PyCaret 3.x.

Conclusion

PyCaret acts as a unified experiment framework rather than a single AutoML system. It standardizes the full machine learning workflow across tasks while remaining transparent and flexible. The consistent lifecycle across modules reduces boilerplate and lowers friction without hiding core decisions. Preprocessing is treated as part of the model, which improves reliability in real deployments. Built-in model libraries provide breadth, while support for custom estimators keeps the framework extensible. Experiment tracking and deployment hooks make it practical for applied work. Overall, PyCaret balances productivity and control, making it suitable for both rapid experimentation and serious production-oriented workflows.

Frequently Asked Questions

A. PyCaret is an experiment framework that standardizes ML workflows and reduces boilerplate, while keeping preprocessing, model comparison, and tuning transparent and user controlled.

A. A PyCaret experiment follows setup, model comparison, training, optional tuning, finalization on full data, and then prediction or deployment using a consistent lifecycle.

A. Yes. Any scikit learn compatible estimator can be integrated into the same training, evaluation, and deployment pipeline alongside built in models.

Login to continue reading and enjoy expert-curated content.

💸 Earn Instantly With This Task

No fees, no waiting — your earnings could be 1 click away.

Start Earning